Stanislava Vostrikova: Modern Optimization Methods in Deep Learning

The seminar examined recent optimization algorithms for deep learning, highlighting their impact on training stability and model generalization. Special emphasis was placed on methods that remain effective with limited data and computational resources.

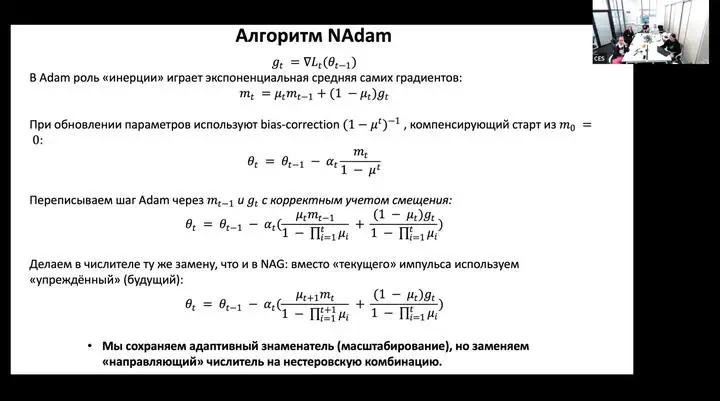

Extended abstract

Stanislava Vostrikova’s presentation was part of the ongoing seminar series on methodological advances in machine learning for Earth system research. The talk covered several state-of-the-art optimization algorithms, namely AdamW, NAdam, LARS, and Muon, that shape the efficiency and stability of neural network training. Each method was analyzed in detail: decoupled weight decay in AdamW, Nesterov acceleration in NAdam, layer-wise adaptive learning-rate scaling in LARS, and orthogonalized updates in Muon, which equalize the singular values of each weight matrix's update.
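For the two Adam-based methods, the difference from plain Adam comes down to one line of the update. The sketch below is a minimal pure-Python illustration on plain lists of floats, not the presenter's code or any library's implementation; the function names `adamw_step` and `nadam_step` and all default hyperparameters are assumptions chosen for illustration. AdamW shrinks the weights directly instead of adding a decay term to the gradient, so the decay is not rescaled by the adaptive denominator. NAdam replaces Adam's momentum term with a Nesterov-style look-ahead. The NAdam variant here keeps β1 constant, whereas some implementations also schedule it over time.

```python
import math
from typing import List, Tuple

Vec = List[float]


def adamw_step(theta: Vec, grad: Vec, m: Vec, v: Vec, t: int,
               lr: float = 1e-3, beta1: float = 0.9, beta2: float = 0.999,
               eps: float = 1e-8,
               weight_decay: float = 1e-2) -> Tuple[Vec, Vec, Vec]:
    """One AdamW step; t is the 1-based step count used for bias correction."""
    new_theta, new_m, new_v = [], [], []
    for p, g, mi, vi in zip(theta, grad, m, v):
        mi = beta1 * mi + (1 - beta1) * g        # first moment (momentum)
        vi = beta2 * vi + (1 - beta2) * g * g    # second moment (per-parameter scale)
        m_hat = mi / (1 - beta1 ** t)            # bias-corrected moments
        v_hat = vi / (1 - beta2 ** t)
        p = p * (1 - lr * weight_decay)          # decoupled decay: shrink the weight itself
        p = p - lr * m_hat / (math.sqrt(v_hat) + eps)
        new_theta.append(p)
        new_m.append(mi)
        new_v.append(vi)
    return new_theta, new_m, new_v


def nadam_step(theta: Vec, grad: Vec, m: Vec, v: Vec, t: int,
               lr: float = 2e-3, beta1: float = 0.9, beta2: float = 0.999,
               eps: float = 1e-8) -> Tuple[Vec, Vec, Vec]:
    """One NAdam step with constant beta1 (no momentum schedule, no weight decay)."""
    new_theta, new_m, new_v = [], [], []
    for p, g, mi, vi in zip(theta, grad, m, v):
        mi = beta1 * mi + (1 - beta1) * g
        vi = beta2 * vi + (1 - beta2) * g * g
        denom = math.sqrt(vi / (1 - beta2 ** t)) + eps
        # Nesterov look-ahead: next-step momentum plus the current gradient term
        look_ahead = (beta1 * mi / (1 - beta1 ** (t + 1))
                      + (1 - beta1) * g / (1 - beta1 ** t))
        new_theta.append(p - lr * look_ahead / denom)
        new_m.append(mi)
        new_v.append(vi)
    return new_theta, new_m, new_v


# Toy usage: minimize sum(p**2) for three steps with AdamW.
params, m, v = [0.5, -1.0], [0.0, 0.0], [0.0, 0.0]
for t in range(1, 4):
    grad = [2 * p for p in params]
    params, m, v = adamw_step(params, grad, m, v, t, lr=0.1)
print(params)
```

Because the first moment is divided by the square root of the second moment, the first step has magnitude close to `lr` for every parameter regardless of its raw gradient scale; this per-parameter normalization is what the Adam family contributes.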

The discussion among participants highlighted that all of these optimizers share a common goal: controlling gradient noise and imbalances in gradient scale, both across individual parameters and between network layers. This challenge is particularly relevant for the small, heterogeneous datasets typical of environmental and climate applications. In the laboratory’s research context, where training often occurs under strict data and resource constraints, Adam-based variants with improved regularization and momentum dynamics, such as AdamW and NAdam, were identified as the most promising approaches. The seminar concluded by outlining directions for future comparative studies of how well different optimizers generalize on limited climate datasets.
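The between-layer side of this imbalance is what LARS and Muon target. The following is a minimal sketch in plain Python, not taken from the presentation: `lars_step` computes a LARS-style trust ratio η‖w‖ / (‖g‖ + λ‖w‖) for a single layer, with momentum omitted, and `orthogonalize` approximates the orthogonal factor UVᵀ of a matrix G = USVᵀ, which is the core operation Muon applies to each weight matrix's momentum update. All names and defaults (η = 10⁻³, 20 iterations) are illustrative assumptions. For simplicity the sketch uses the classic cubic Newton-Schulz iteration; Muon's reference implementation instead uses a tuned quintic polynomial with fewer iterations.

```python
import math
from typing import List

Vec = List[float]
Mat = List[List[float]]


def norm(x: Vec) -> float:
    return math.sqrt(sum(xi * xi for xi in x))


def lars_step(w: Vec, g: Vec, lr: float = 0.1, eta: float = 1e-3,
              weight_decay: float = 0.0) -> Vec:
    """LARS-style update for a single layer (momentum omitted)."""
    w_norm, g_norm = norm(w), norm(g)
    if w_norm > 0 and g_norm > 0:
        # Layer-wise trust ratio: step length scales with this layer's weight norm
        trust = eta * w_norm / (g_norm + weight_decay * w_norm)
    else:
        trust = 1.0
    return [wi - lr * trust * (gi + weight_decay * wi) for wi, gi in zip(w, g)]


def matmul(a: Mat, b: Mat) -> Mat:
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a: Mat) -> Mat:
    return [list(col) for col in zip(*a)]


def orthogonalize(g: Mat, steps: int = 20) -> Mat:
    """Approximate U V^T for g = U S V^T with the cubic Newton-Schulz iteration."""
    fro = math.sqrt(sum(x * x for row in g for x in row))
    if fro == 0:
        return [row[:] for row in g]
    # Frobenius scaling puts every nonzero singular value in (0, 1],
    # inside the iteration's convergence region (0, sqrt(3))
    x = [[val / fro for val in row] for row in g]
    for _ in range(steps):
        # X <- 1.5 X - 0.5 X X^T X maps each singular value s to 1.5 s - 0.5 s^3
        xxtx = matmul(matmul(x, transpose(x)), x)
        x = [[1.5 * a - 0.5 * b for a, b in zip(ra, rb)] for ra, rb in zip(x, xxtx)]
    return x


w = [3.0, 4.0]
print(lars_step(w, [0.0, 100.0]))   # large gradient
print(lars_step(w, [0.0, 0.1]))     # small gradient, same step length
print(orthogonalize([[1.0, 2.0], [3.0, 4.0]]))
```

With zero weight decay, the trust ratio gives every layer a step of length lr · η · ‖w‖, so a layer whose gradient is 1000 times larger moves no further. The orthogonalized update likewise has all nonzero singular values close to 1, whatever their spread in the raw gradient.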